Why You Should Implement a Content + Document Management System at Your Organization

Does your firm rely on developing proposals and responses to Requests for Proposals (RFPs) and Requests for Information (RFIs) to get ahead? If so, managing your documents and content effectively deserves serious attention. Implementing a comprehensive Document and Content Management System (CMS) across your entire organization offers multifaceted advantages, addressing critical aspects of information handling, collaboration, and compliance.

Document Storage — A centralized repository serves as a cornerstone for efficient document management. Storing a myriad of documents, ranging from proposals to contracts, in a secure and easily accessible location ensures that the organization can manage and retrieve critical information effortlessly.

For example, consider the scenario where a government contractor is simultaneously working on multiple projects with various teams. A unified document storage system eliminates the need for scattered and siloed storage solutions, fostering a more organized and streamlined approach to document management.

Access Control — In the context of government contracting, where confidentiality and data security are paramount, controlling access to sensitive documents is critical. A robust CMS allows organizations to implement role-based access control, ensuring that only authorized personnel have access to specific documents.

Imagine a scenario where a proposal contains sensitive pricing information or proprietary methodologies. With access control mechanisms in place, the organization can restrict access to this information to only those individuals directly involved in the proposal development, minimizing the risk of unauthorized access.

Audit Trails — Transparent and accountable processes are essential, especially in government contracting, where compliance with regulations and adherence to established procedures are non-negotiable. An effective CMS provides detailed audit trails, recording who changed each document, what changed, and when. If you are pursuing ISO or CMMI certification, you will face audits, and a complete change history helps you demonstrate that your documented processes are actually followed.

For example, consider a situation where a proposal undergoes multiple revisions. The ability to trace and review each modification ensures transparency and accountability, both crucial for compliance with regulatory requirements and internal governance standards.

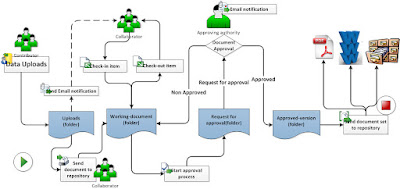

Workflow Automation — In the realm of proposal development, workflows often involve multiple stages, requiring collaboration across different teams and departments. Workflow automation within a CMS streamlines these processes, reducing manual effort and enhancing overall efficiency.

For instance, envision a scenario where a proposal undergoes sequential reviews by subject matter experts, legal teams, and project managers. With workflow automation, the CMS can automatically route the document through the necessary stages, notifying relevant stakeholders at each step, thereby expediting the review process.

Collaboration and Integration — Effective collaboration is the backbone of successful proposal development. A CMS facilitates seamless collaboration by providing a centralized platform for team members to work on documents collectively. Integration capabilities with other tools and platforms further enhance collaboration by eliminating data silos.

Consider a government contractor collaborating with external consultants and internal teams on a complex proposal. With a CMS, all contributors can access and edit the document in real time, fostering a collaborative environment that accelerates the proposal development lifecycle.

Disaster Recovery — Disaster recovery plays a central role in safeguarding critical documents. Unforeseen events, ranging from hardware failures to natural disasters, can threaten valuable information. A CMS with built-in backup and recovery capabilities minimizes the risk of data loss.

Imagine a scenario where a government contractor's office experiences a sudden server failure. Without proper backup mechanisms, crucial proposal documents and historical information could be lost. A CMS with disaster recovery capabilities mitigates such risks, ensuring business continuity.

Business Process Automation — Automating routine tasks and processes is a key driver of operational efficiency. In government contracting, where repetitive tasks are inherent in the proposal development lifecycle, a CMS that supports business process automation becomes indispensable.

Consider the process of document approval within a government contractor's organization. A CMS with business process automation can streamline the approval workflow, automatically routing documents for approval based on predefined rules, thereby reducing delays and bottlenecks.

Conclusion:

At Bluedog, we see the organization-wide implementation of a robust Document and Content Management System not just as a technological enhancement but as a strategic imperative. From enhancing document security and compliance to fostering collaboration and streamlining workflows, the benefits are multifaceted. Government contractors, our clients, stand to gain a competitive edge by embracing a CMS that aligns with the unique challenges and requirements of their industry, ultimately contributing to more efficient and successful proposal development and overall business operations.